Research interests

I am interested in understanding how neurons compute, particularly how they represent information at the population level and how interactions at the single-neuron level influence this representation. My background in engineering physics colors my approach to this question, but I work best in interdisciplinary settings integrating many different perspectives on neuroscientific problems.

Population-level encoding and representational drift

Some populations of neurons can change their behavioral tuning gradually over days, even in the absence of overt learning. The rate of this drift varies considerably from neuron to neuron, raising the question of which features of a neuron's activity predict its tuning stability. During a six-month stay at the University of Cambridge, I worked with Timothy O’Leary’s group, under the supervision of Mónika Józsa and Michael E. Rule, to understand how noise correlations and their effect on information encoding relate to tuning stability. More details are available in our preprint.

My current work in the Sprekeler Lab continues this collaboration with Timothy O’Leary and builds on our findings by exploring the role of inhibition in representational drift, primarily through neural circuit models.

Bio-inspired and material computing

My PhD research was part of the SOCRATES project and was also aligned with the goals of NordSTAR, where I coordinated a satellite group on Material Computing. Along with my colleagues at the Living Technology Lab, led by Stefano Nichele, I worked toward translating the findings from my research into novel computational models and hardware that are energy efficient, capable of learning, and robust against component failure. My work on the dynamics and flow of information in neural systems will inform the development of models emulating the behavior we observe in biological systems.

In one such project, I supervised a team of talented master's students in applying evolutionary computation to produce models that showed patterns of activity similar to those observed in experimental data obtained from living neurons in vitro. Our paper on this project was awarded runner-up for the IEEE Brain Award at the 2020 IEEE Symposium Series on Computational Intelligence. (OsloMet news coverage.) In a separate master's thesis project by one of these students, Jørgen Jensen Farner, we studied delay learning in spiking neuron models, inspired by findings on axonal computation and temporal coding.

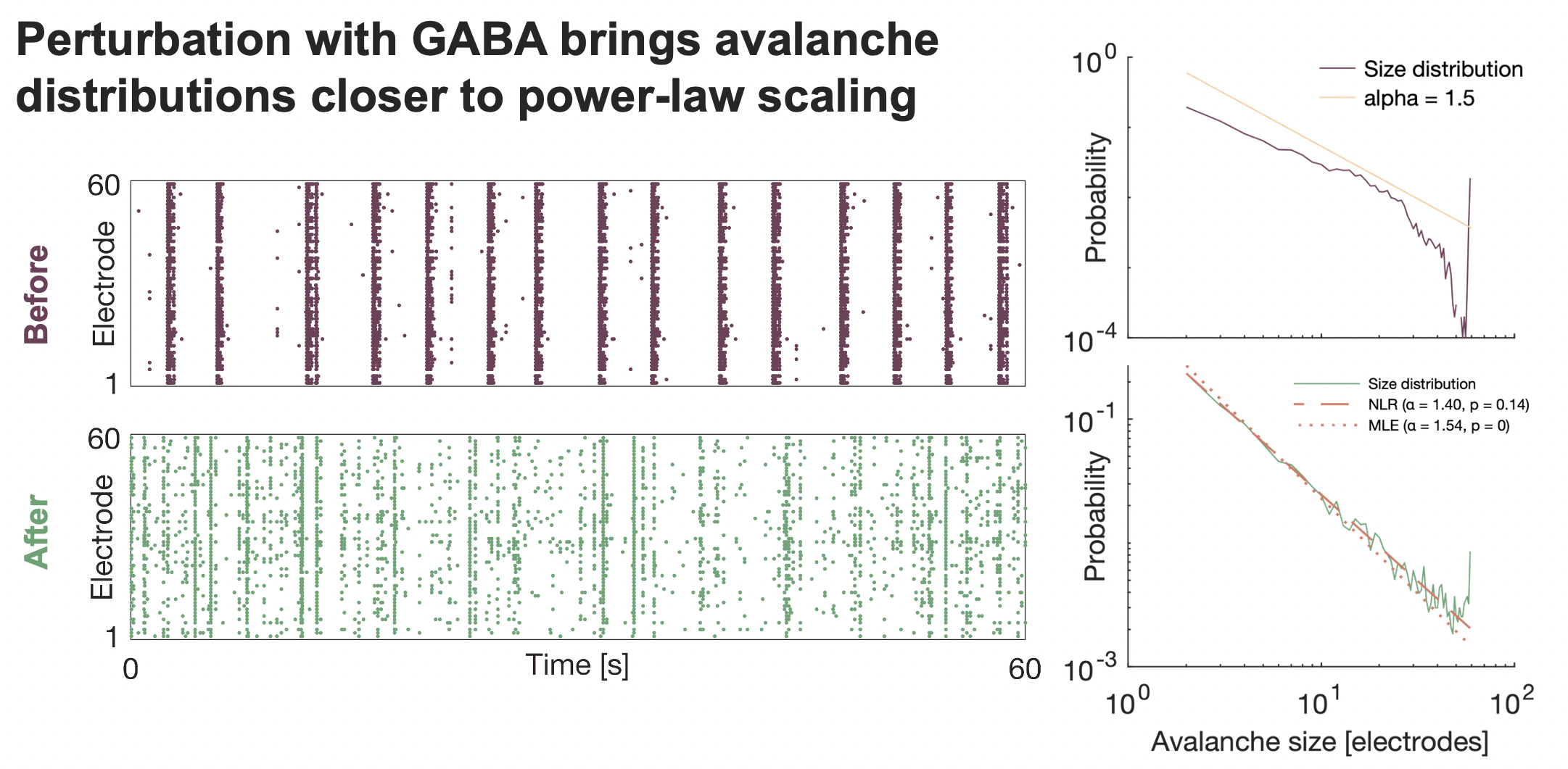

Signatures of criticality in neural systems

One focus of my PhD research has been studying neural computation through the lens of criticality. The critical state, poised between order and disorder, shows many features associated with good computational performance, such as maximal dynamic range and long-range spatiotemporal correlations. I have been working to evaluate how close networks of neurons in vitro are to criticality and to understand whether this closeness correlates with other aspects of network behavior, such as patterns of functional connectivity.

Signal propagation in vitro

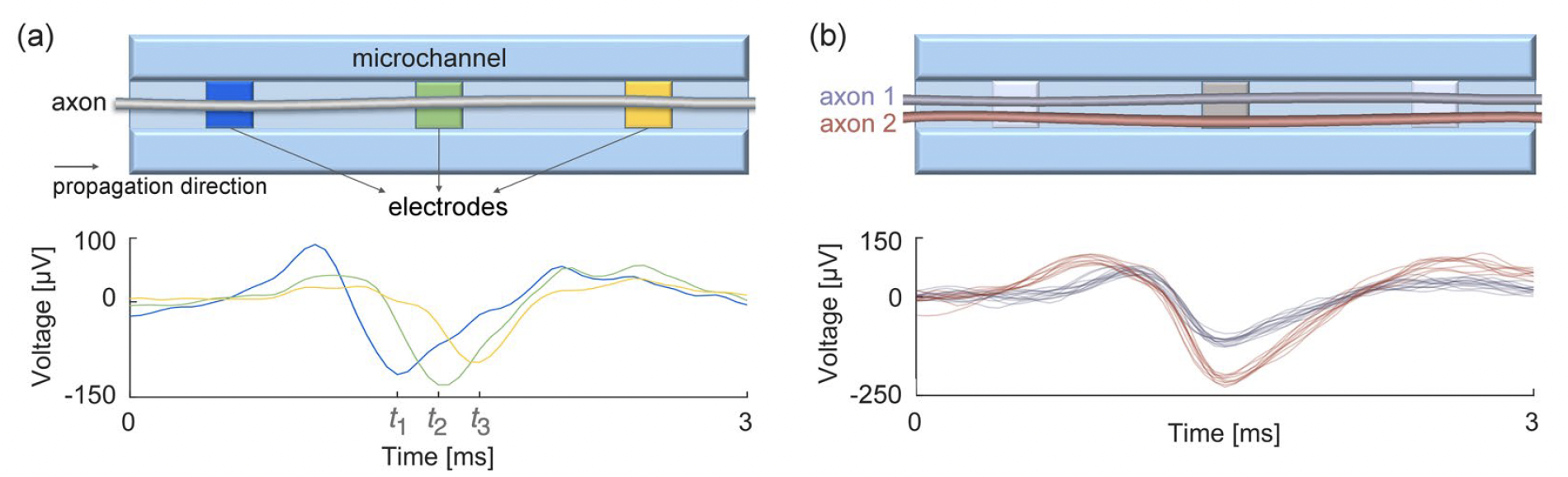

I first became interested in neuroscience during my master's thesis work with the Neuroengineering and Computational Neuroscience group (NCN), led by Paulo Aguiar, at the Institute for Research and Innovation in Health (i3S) in Porto, Portugal. Working with this group gave me a wonderful introduction to neuroscience, starting with understanding the Hodgkin–Huxley model and the electrical basis of neural activity and expanding into experimental work with neural cell culture and microelectrode array (MEA) electrophysiology. For my thesis work, I developed a computational tool for the analysis of electrophysiological signals traveling along axons confined to microfluidic tunnels. This tool provides an intuitive audiovisual interface to the data and performs propagation velocity calculation and simple graphical spike sorting, and it has since been used in a number of publications by NCN.
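
To give a rough sense of what a propagation velocity calculation can look like, here is a minimal sketch in Python. It is an illustration only and not the implementation used in the tool described above: the function name `propagation_velocity`, the electrode positions, and the arrival times are all hypothetical. It assumes that the same spike has already been detected at several electrodes along one tunnel, and it estimates the velocity as the least-squares slope of electrode position against arrival time.

```python
import numpy as np


def propagation_velocity(positions_um, arrival_times_ms):
    """Estimate propagation velocity in m/s from one spike seen at several electrodes.

    positions_um: electrode positions along the tunnel, in micrometers (hypothetical layout).
    arrival_times_ms: time at which the spike was detected at each electrode, in milliseconds.
    """
    positions = np.asarray(positions_um, dtype=float)
    times = np.asarray(arrival_times_ms, dtype=float)
    if positions.shape != times.shape or positions.size < 2:
        raise ValueError("need matching position and time arrays with at least two electrodes")

    # Fit position = slope * time + intercept; the slope is the velocity in um/ms.
    slope_um_per_ms, _intercept = np.polyfit(times, positions, 1)

    # Unit conversion: 1 um/ms = 1e-6 m / 1e-3 s = 1e-3 m/s.
    return slope_um_per_ms * 1e-3


# Made-up example: four electrodes spaced 100 um apart, with 0.2 ms between arrivals.
example_velocity = propagation_velocity([0, 100, 200, 300], [0.0, 0.2, 0.4, 0.6])
print(f"{example_velocity:.2f} m/s")
```

A line fit over all electrodes is used here instead of a single pairwise difference because it averages out timing jitter at individual electrodes. A negative slope would indicate propagation in the opposite direction along the tunnel.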